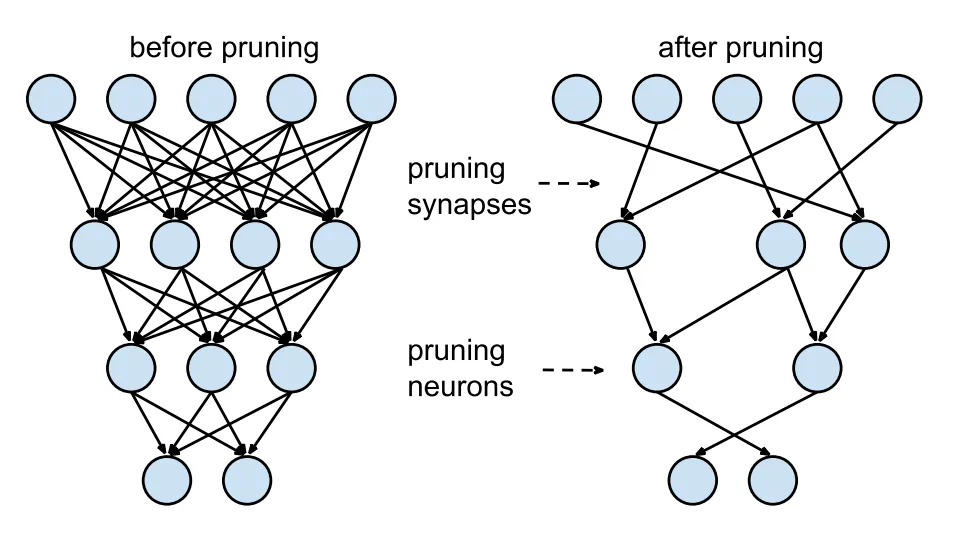

Model pruning reduces the size and complexity of a neural network by removing redundant parameters. This lowers computational cost and memory usage, which makes the model more practical to deploy on resource-constrained devices. To this end, the team introduced Multimodal Flow Pruning (Multiflow), a task-agnostic approach to pruning vision-language models. With Multiflow, a model is pruned only once and remains transferable to arbitrary downstream tasks, so no re-pruning is needed for each new task.
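To make the general idea of pruning concrete, here is a minimal sketch of plain magnitude pruning, which zeroes out the weights with the smallest absolute values. This is a generic baseline chosen for illustration and is not the Multiflow criterion; the function name `magnitude_prune` and the example weights are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude.

    Illustrative baseline only: this is NOT Multiflow's scoring rule.
    Returns the pruned weights and the binary keep-mask.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")

    # Number of weights to remove (rounded down).
    n_prune = int(len(weights) * sparsity)

    # Indices ordered by ascending magnitude; the first n_prune get removed.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_idx = set(order[:n_prune])

    # 1 keeps a weight, 0 removes it.
    mask = [0 if i in pruned_idx else 1 for i in range(len(weights))]
    pruned = [w if m else 0.0 for w, m in zip(weights, mask)]
    return pruned, mask


# Hypothetical weights for a single layer, pruned to 50% sparsity.
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned, mask = magnitude_prune(weights, sparsity=0.5)
```

In a task-agnostic setting, a mask like this would be computed once on the pretrained model and then reused for every downstream task, rather than being recomputed per task.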